In the era of big data, high concurrency of databases has become the bottleneck of the development of data systems. Database optimization is imperative. Let's introduce several common database optimization methods.

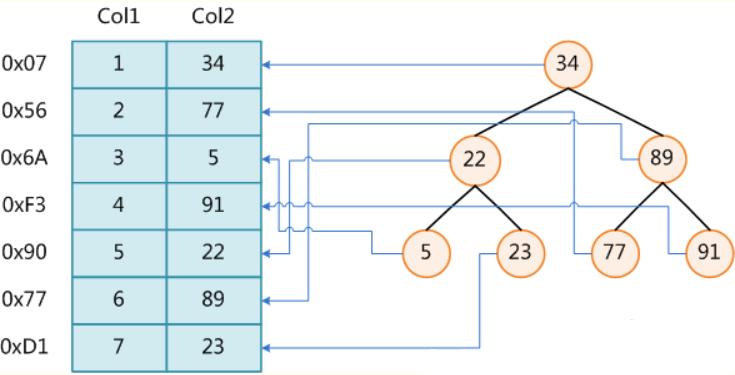

1. Index

Establishing indexes is the lowest cost and fastest effective solution for database optimization. This solution has a good effect on the improvement of the database. There are less complicated table associations. But the operation efficiency of SQL can be improved.

2. Sub-library, Sub-table and Sub-partition

Sub-library can be used according to business. Shunt database concurrency pressure. In this way, the database will be more organized and hierarchical. For users, it is easier to find the data they need. As long as they know the topic they need to look for, they can look for data in the corresponding library.

The index is suitable for dealing with millions of data. Tens of millions of levels of data are well-used. They can be managed. If the amount of data is hundreds of millions of levels, the index will lose its advantages. A single index file may be hundreds of megabytes or more. The appearance of sub-tables has solved such problems.

The implementation principle of partition is the same as that of partition table. It puts the data of corresponding rules together. The only difference is that the partition only needs to set the partition rules. The inserted data will be inserted into the designated zone. When querying, you can query the required areas. It is equivalent to make the sub-tables transparent to the outside world. The cross-table database helped us merge and deal with it. This makes it more convenient to use the score table. But partitions have their own problems. There is an upper limit on concurrent access to each database table. Sub-tables can resist high concurrency. And partitions cannot. As for the choice of separate tables or partitions, it needs to be treated on a case-by-case basis.

3. Database Engine

The database engine is to solve the problems of large amount of data and slow consultation speed. It is a way to optimize the database.

4. Pretreatment

Real-time data (data of the day) is limited. The real mount of data is large is historical data. If queries based on large table historical data involve some large table associations, this SQL is difficult to optimize.

5. Separation of Reading and Writing

In the case of large concurrency of databases, the best practice is to scale out. You can add servers to improve the ability to process data to improve the ability. But it has the function of data backup. This not only processes data, but also is not easy to lose.

The above briefly introduces several common database optimization methods. These are all commonly used optimization methods in real networks. I hope it can help you.