Despite having complex sensor arrangements and thousands of hours of research and development poured into them, self-driving cars and autopilot systems can be gamed with consumer hardware and 2D image projections.

Researchers at Ben-Gurion University of the Negev in Israel were able to trick self-driving cars, including a Tesla Model X, into braking and taking evasive action to avoid “depthless phantom objects.”

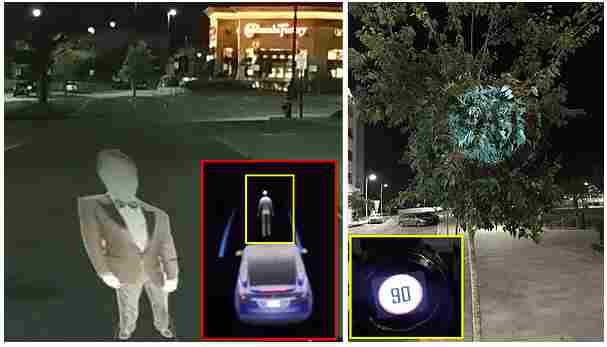

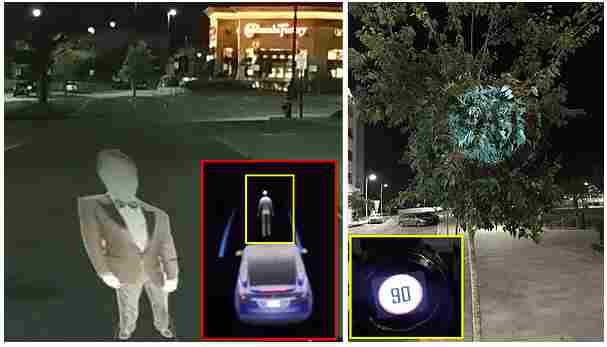

The image above demonstrates how two-dimensional image projections tricked a Tesla’s Autopilot system into thinking there was a person standing in the road. To the human eye, it’s clear that this is a hologram of sorts that wouldn’t pose a physical threat, but the car perceives it otherwise.

This technique of phantom image projections can also be used to trick autopilot systems into “thinking” any number of objects lie in the road ahead, including cars, trucks, people, and motorcycles.

Researchers were even able to trick the system’s speed limit warning features with a phantom road sign.

Using phantom images, researchers were able to get Tesla’s Autopilot system to brake suddenly. They even managed to get the Tesla to deviate from its lane by projecting new road markings onto the tarmac.

Take a look at the outcome of the researchers’ experiments in their video below.

Drone attacks

What’s more terrifying, though, is that “phantom image attacks” can be carried out remotely, using drones or by hacking video billboards.

In some cases, the phantom image could appear and disappear before a person has time to notice it. The high-speed sensors used in autopilot systems could still register the image, though.
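To make the timing argument concrete, here is a rough sketch of the arithmetic involved. It is an illustration only: the function names, the camera frame rate, and the driver reaction window are all hypothetical values chosen for the example, not figures from the researchers or from any real autopilot system.

```python
import math


def guaranteed_frames(duration_ms: float, fps: float) -> int:
    """Whole camera frames guaranteed to fall inside a projection
    that stays visible for duration_ms, at a sampling rate of fps."""
    if duration_ms < 0 or fps <= 0:
        raise ValueError("duration_ms must be >= 0 and fps must be > 0")
    return math.floor(duration_ms * fps / 1000)


def slips_past_driver(duration_ms: float, fps: float, driver_notice_ms: float) -> bool:
    """True if the camera is guaranteed at least one frame of the phantom
    while it vanishes before the (assumed) time a driver needs to notice it."""
    return guaranteed_frames(duration_ms, fps) >= 1 and duration_ms < driver_notice_ms


if __name__ == "__main__":
    # Assumed values for illustration: a 125 ms projection, a 30 fps camera,
    # and a driver who needs 200 ms to notice something new on the road.
    print(guaranteed_frames(125, 30))
    print(slips_past_driver(125, 30, driver_notice_ms=200))
```

Under those assumed numbers the camera is guaranteed to capture the phantom in several frames even though it is gone before the driver would notice it, which is the gap the attack relies on.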

By attaching a mini-projector to the underside of a drone, researchers projected a phantom speed limit sign onto a nearby building. Even though the sign was visible for just a split-second, it was enough to trick the autopilot system.

A similar strategy can be carried out by hacking video billboards that face the road. By injecting phantom road signs, or images, into a video advert, autopilot systems can be tricked into changing speed.

Is this the future?

It all sounds painfully dystopian. Picture it now.

It’s 2035, and you’ve just been forced by the government to buy an electric car because everything else is banned. You’re cruising to work on autopilot when, all of a sudden, a drone appears and projects new road markings that cause your car to veer into oncoming traffic. At best, you spill your coffee.

Of course, this scenario assumes that the “phantom attack” issue is never resolved.

Thankfully, the researchers disclosed their findings to Tesla and the makers of the other autopilot systems they tested. Hopefully fixes are on their way, before more people figure out how to carry out these attacks.

Indeed, the researchers themselves were able to train a model that can accurately detect phantoms by examining the image’s context, reflected light, and surface. The problem can be overcome.
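As a loose illustration of how such a check might be wired together, the sketch below combines one score per aspect (context, reflected light, surface) into a single verdict. Everything in it is an assumption made for the example: the `AspectScores` class, the `is_phantom` function, the 0-to-1 "realness" scale, the simple averaging, and the 0.5 threshold are hypothetical and are not taken from the researchers’ model.

```python
from dataclasses import dataclass


@dataclass
class AspectScores:
    """Hypothetical per-aspect 'realness' scores, each between 0.0 and 1.0.

    In a real system each score would come from a trained model; here they
    are plain numbers so the combination step can be shown on its own.
    """
    context: float          # does the object make sense where it appears?
    reflected_light: float  # does light bounce off it like a physical object?
    surface: float          # does its surface look like a real object's?


def is_phantom(scores: AspectScores, threshold: float = 0.5) -> bool:
    """Flag the detection as a phantom when the average realness is low.

    Averaging is an assumed, deliberately simple way to combine the scores.
    """
    for value in (scores.context, scores.reflected_light, scores.surface):
        if not 0.0 <= value <= 1.0:
            raise ValueError("scores must be between 0.0 and 1.0")
    mean = (scores.context + scores.reflected_light + scores.surface) / 3
    return mean < threshold


if __name__ == "__main__":
    projected_sign = AspectScores(context=0.2, reflected_light=0.1, surface=0.3)
    real_sign = AspectScores(context=0.9, reflected_light=0.8, surface=0.95)
    print(is_phantom(projected_sign), is_phantom(real_sign))
```

The point of the sketch is only that several independent cues can be weighed together, so a projection has to fool all of them at once rather than just the object detector.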

But right now, it seems that self-driving systems respond to phantom images on a “better safe than sorry” basis. Which is fine, so long as drivers using autopilot pay attention, too.